Let’s take a look at an overview of Kubernetes in this article.


What is Kubernetes

Kubernetes is one of the most popular technologies in DevOps. It was originally created to solve container orchestration problems at large scale or in mission-critical applications. Since then, it has seen remarkably fast adoption in the enterprise sector.

When an application is developed, its code is often divided into multiple parts for easy and quick deployment. Each part, together with the dependencies it needs, is wrapped into a single unit called a container. Kubernetes is an open-source platform for managing containerized workloads and services and for automating application deployment, scaling, and management.

First released in June 2014, Kubernetes now has an ecosystem that includes a package manager, dozens of open-source distributions, great monitoring tools, K8s products from all major cloud providers, and Argo CD. Kubernetes provides complex features such as service discovery, CNI, node taints, StatefulSets, autoscalers, and others in a declarative way. All of the above has made it the tool of choice for most distributed applications.

Architecture

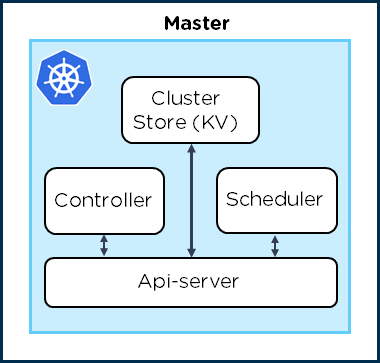

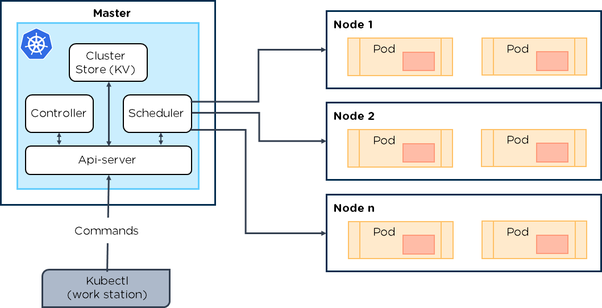

Kubernetes follows a master and worker architecture. The master (also called the control plane) does the heavy lifting and assigns tasks to the workers, which are simply called nodes.

Master Components:

API Server: This component on the master exposes the Kubernetes API and is the front end of the Kubernetes control plane. Every request that a user sends with kubectl (the Kubernetes command-line client), or that any other service sends to the cluster, is handled by the API server and then forwarded to the appropriate components.

Controller: It helps maintain the desired state of your cluster. Kubernetes is declarative: we do not tell it each step of how to do something. We only describe the state we want, and Kubernetes takes care of how to achieve it. Whenever the current state of the cluster drifts from the desired state, the controller kicks in and brings the current state back in line with the desired state. The controller is in fact made up of many individual controllers, such as:

- Node Controller: Responsible for noticing and responding when nodes go down.

- Replication Controller: Responsible for maintaining the correct number of pods for every replication controller object in the system.

- And many more…
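The reconciliation idea described above can be illustrated with a minimal sketch. This is not how Kubernetes controllers are implemented; the `Cluster` class, its fields, and the `reconcile` function are hypothetical names invented for this example, and the "cluster" is just an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for cluster state; not a real Kubernetes object."""
    desired_replicas: int
    running_pods: list[str] = field(default_factory=list)
    next_id: int = 0


def reconcile(cluster: Cluster) -> list[str]:
    """Take one pass toward the desired state and return the actions taken."""
    actions = []
    # Too few pods: create the missing ones.
    while len(cluster.running_pods) < cluster.desired_replicas:
        name = f"pod-{cluster.next_id}"
        cluster.next_id += 1
        cluster.running_pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: delete the surplus, newest first.
    while len(cluster.running_pods) > cluster.desired_replicas:
        name = cluster.running_pods.pop()
        actions.append(f"delete {name}")
    return actions


cluster = Cluster(desired_replicas=3)
first_pass = reconcile(cluster)       # starts three pods
cluster.running_pods.remove("pod-1")  # simulate a pod crashing
second_pass = reconcile(cluster)      # replaces the lost pod
print(first_pass, second_pass, cluster.running_pods)
```

Note that the caller never says "start pod-3". It only states how many replicas it wants, and each reconcile pass works out the difference.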

Scheduler: It watches the API server for newly created pods that have no node assigned and selects a node for them to run on. In short, it schedules pods onto nodes.
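As a rough illustration of what "selecting a node" can mean, here is a simplified sketch that first filters out nodes without enough free CPU and then prefers the node with the most free CPU. The real scheduler weighs many more factors; the `schedule` function, the dictionary layout, and the "most free CPU" rule are assumptions made for this example only.

```python
def schedule(pods, nodes):
    """Assign each pod without a node to the node with the most free CPU.

    pods:  list of dicts like {"name": str, "cpu": int, "node": str or None}
    nodes: dict mapping node name to free CPU (in millicores)
    """
    for pod in pods:
        if pod["node"] is not None:
            continue  # already scheduled
        # Filter: keep only nodes with enough free CPU for this pod.
        feasible = [n for n, free in nodes.items() if free >= pod["cpu"]]
        if not feasible:
            continue  # pod stays pending until capacity appears
        # Score: prefer the node with the most free CPU.
        best = max(feasible, key=lambda n: nodes[n])
        pod["node"] = best
        nodes[best] -= pod["cpu"]
    return pods


nodes = {"node-a": 1000, "node-b": 500}
pods = [
    {"name": "web", "cpu": 600, "node": None},
    {"name": "cache", "cpu": 400, "node": None},
    {"name": "batch", "cpu": 2000, "node": None},
]
schedule(pods, nodes)
print([(p["name"], p["node"]) for p in pods])
```

In this run `web` lands on `node-a`, `cache` on `node-b`, and `batch` stays unassigned because no node has 2000 millicores free.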

etcd: This is the persistent key-value store that holds all cluster data. Always have a backup plan for the etcd data of your Kubernetes cluster: if etcd is lost, the whole cluster goes with it, and without a backup there is no way to get the cluster back.

Worker Components:

Kubelet: An agent that runs on each node in the cluster. It does the following tasks:

- Makes sure that containers are running in a pod.

- Reports node and pod status back to the master.

- Registers the node with the cluster.

- Watches the API server for pods assigned to its node.

Kube Proxy: It takes care of Kubernetes networking. It enables the Kubernetes service abstraction by maintaining network rules on the host and performing connection forwarding.
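The service abstraction can be pictured as one stable front door that spreads incoming connections across several backend pods. The sketch below models only that idea with a round-robin choice. Real kube-proxy programs network rules on the host rather than running code like this, and the `ServiceProxy` class and the addresses are hypothetical.

```python
import itertools


class ServiceProxy:
    """Toy model of a Service: one stable name, many backend pod addresses."""

    def __init__(self, endpoints):
        self._endpoints = list(endpoints)
        # cycle() yields the endpoints in order, forever.
        self._cycle = itertools.cycle(self._endpoints)

    def pick_backend(self):
        """Return the backend that should receive the next connection."""
        return next(self._cycle)


proxy = ServiceProxy(["10.0.0.4:8080", "10.0.0.7:8080"])
chosen = [proxy.pick_backend() for _ in range(3)]
print(chosen)
```

Clients only ever talk to the proxy, so backend pods can come and go without clients having to learn new addresses.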

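Container Runtime: It is responsible for running containers. Kubernetes supports any runtime that implements the Container Runtime Interface (CRI), for example containerd and CRI-O. Older releases also worked with Docker Engine directly and with rkt.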

Benefits

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration: Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

- Automated rollouts and rollbacks: You can describe the desired state for your deployed containers using Kubernetes, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and adopt all their resources to the new containers.

- Automatic bin packing: You provide Kubernetes with a cluster of nodes that it can use to run containerized tasks. You tell Kubernetes how much CPU and memory (RAM) each container needs. It can then fit containers onto your nodes to make the best use of your resources.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don’t respond to your user-defined health check, and doesn’t advertise containers to clients until they are ready to serve.

- Secret and configuration management: Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.
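To make "changing the actual state to the desired state at a controlled rate" from the rollouts item more concrete, here is a small sketch of a rolling update over a list of pod versions. It is only a simulation: the `rolling_update` function is hypothetical, and its `max_unavailable` parameter is loosely modeled on the idea of limiting how many pods are replaced at once.

```python
def rolling_update(pods, new_version, max_unavailable=1):
    """Replace pods with new_version in batches of at most max_unavailable.

    Returns the list of pod versions after each step, so the gradual
    change from the old state to the desired state is visible.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    pods = list(pods)  # work on a copy; the caller's list is untouched
    history = [list(pods)]
    outdated = [i for i, version in enumerate(pods) if version != new_version]
    for start in range(0, len(outdated), max_unavailable):
        for i in outdated[start:start + max_unavailable]:
            pods[i] = new_version  # old pod removed, new pod takes its place
        history.append(list(pods))
    return history


steps = rolling_update(["v1", "v1", "v1"], "v2", max_unavailable=1)
for step in steps:
    print(step)
```

A rollback is the same operation in the other direction: calling `rolling_update` on the result with `"v1"` as the target walks the pods back one batch at a time.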

Advantages and Disadvantages

Advantages

- Write Dockerfiles + manifests once, run anywhere (on EKS, GKE, Tectonic, or AKS on Azure). Very little vendor lock-in.

- Easy to plug third-party services into your cluster (e.g., spin up a replicated MySQL in seconds).

- Hosted Kubernetes (e.g., GKE) makes “productionizing” applications dead simple:

  - Simple horizontal (number of pods) and vertical (single pod) scaling

  - Failed pods restart automatically

  - Access controls built in

  - Logs and monitoring automatically wired in

- You can transition to serverless applications on your Kubernetes cluster using Knative.

- Kubernetes is winning; you are unlikely to have picked the wrong horse.

Disadvantages

- Virtualization isn’t perfect; for high-performance applications, it does matter where your application runs.

- Pods with high network utilization will impact co-located pods

- Pods with high disk IO will impact pods using the same volume.

- Kubernetes is a work in progress: there will be bugs and incomplete features. You will need to update frequently to pick up important features and bug fixes.

- The jury is out on whether Kubernetes is the future or a brief stepping stone on the way to full serverless computing.

Reference:

https://kubernetes.io/docs/home/

Hope this article helps!